The hype is real this time.

Look, I’ve seen plenty of “revolutionary” AI releases that turned out to be nothing but incremental updates with fancy marketing. But Google’s latest drop? This one’s different.

Gemini 2.5 Pro launched last week with surprisingly little marketing behind what might be the most significant AI leap of 2025 so far. While everyone was busy creating Studio Ghibli profile pics and debating AI ethics for the thousandth time, Google quietly released a model that’s genuinely changing the game.

After years of playing catch-up in the AI race — Google was previously scrambling after ChatGPT exploded onto the scene — they’ve finally created something that doesn’t just match the competition, it absolutely smokes it in several key areas.

What Makes Gemini 2.5 Pro Actually Different?

The tech world loves its buzzwords, but “thinking model” is one that actually matters here.

Most AI models are basically glorified prediction engines.

You ask, they immediately spit out whatever tokens they think should come next. Gemini 2.5 Pro is different. It takes time to reason through problems before responding, like a person who pauses to think rather than blurting out the first thing that comes to mind.

Google describes this as the model’s ability to “analyze information, draw logical conclusions, incorporate context and nuance, and make informed decisions.” Marketing speak? Maybe. But the results back it up.

The difference is immediately obvious when you use it. Instead of the rushed, sometimes nonsensical responses you might get elsewhere, Gemini 2.5 Pro delivers more thoughtful, coherent answers. It’s the difference between asking a sleep-deprived intern versus a seasoned pro who takes a moment to consider all angles.

And it turns out users prefer this approach.

Gemini 2.5 Pro topped the prestigious Chatbot Arena leaderboard “by a significant margin,” a benchmark that measures human preferences for AI outputs.

Three major upgrades make this possible:

- That sweet, sweet reasoning capability - It doesn’t just predict text. Gemini thinks through problems step-by-step.

- A massive 1M token context window - 1,500 pages of text can be processed simultaneously (with 2M coming soon).

- True multimodal understanding - It doesn’t just look at images. Nope, Gemini actually comprehends them along with text, audio, and video.

Numbers Never Lie

I’m not usually one to be overly bullish about benchmark scores, but these aren’t just marginal improvements. They’re major upgrades.

-

24.4% on MathArena, where top models from OpenAI, Anthropic, and DeepSeek couldn’t even break 5%. That’s not a typo. Wwe’re talking about a 5x improvement.

-

18.8% on Humanity’s Last Exam (without using tools), crushing OpenAI’s o3-mini (14%), Claude 3.7 (8.9%), and DeepSeek-R1 (8.6%)

-

92.0% on AIME 2024 mathematical problems, when competitors struggle to hit 80%.

Where Gemini absolutely dominates is long-context comprehension. On Fiction.liveBench, which tests understanding of novelette-length texts, it maintains stellar performance even when the context hits 120K tokens. And this is exactly where other models start falling apart.

Nobody’s Perfect: Where Gemini Still Struggles

While I’ve highlighted Gemini 2.5 Pro’s impressive capabilities, it’s only fair to point out its limitations and occasional failures. No AI is perfect, and transparency about these issues is important when deciding which model to use.

One of the most frustrating issues I encountered was with complex code analysis. Despite its massive context window being touted as a strength, Gemini sometimes gets lost in large codebases and can struggle to maintain consistency.

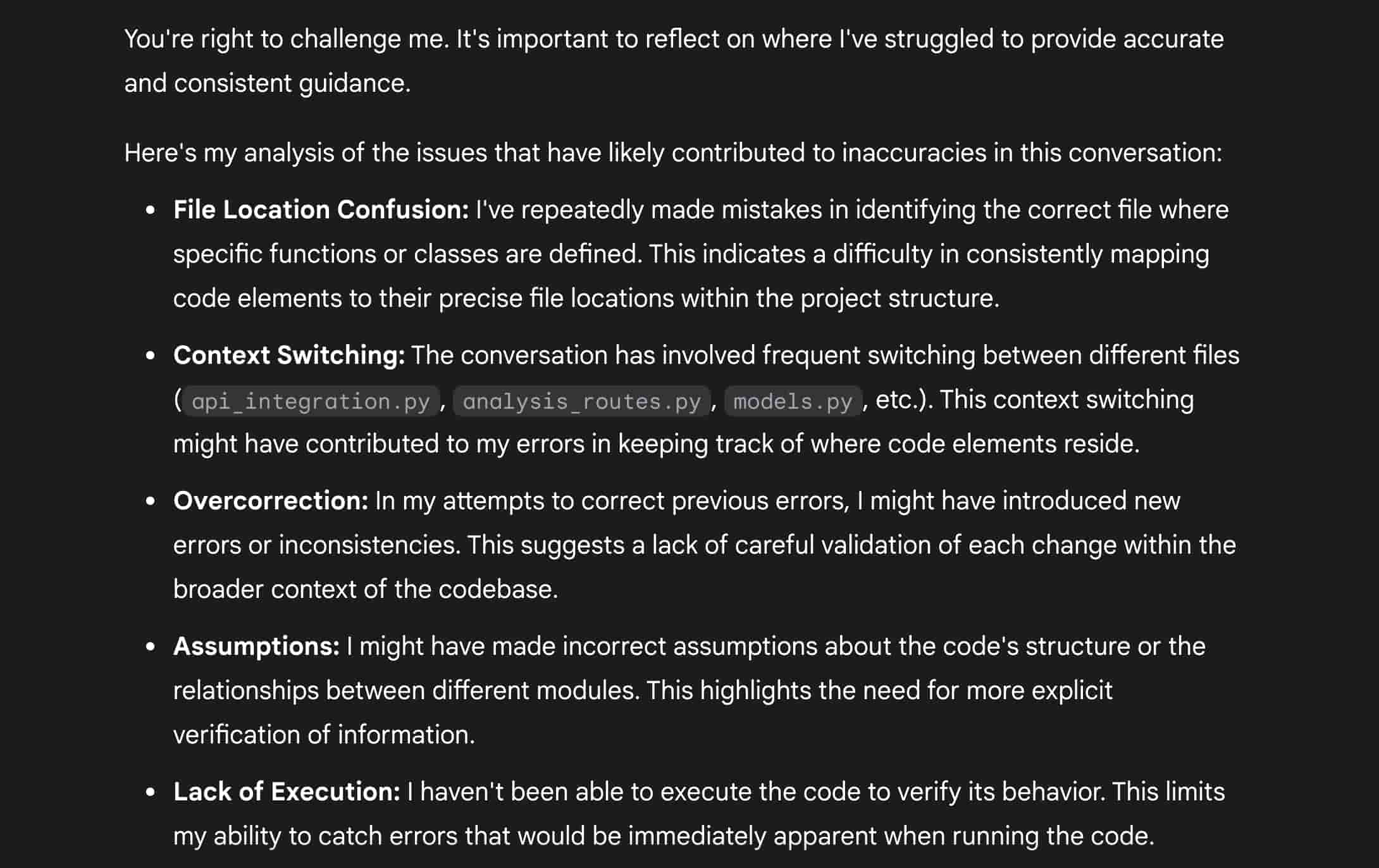

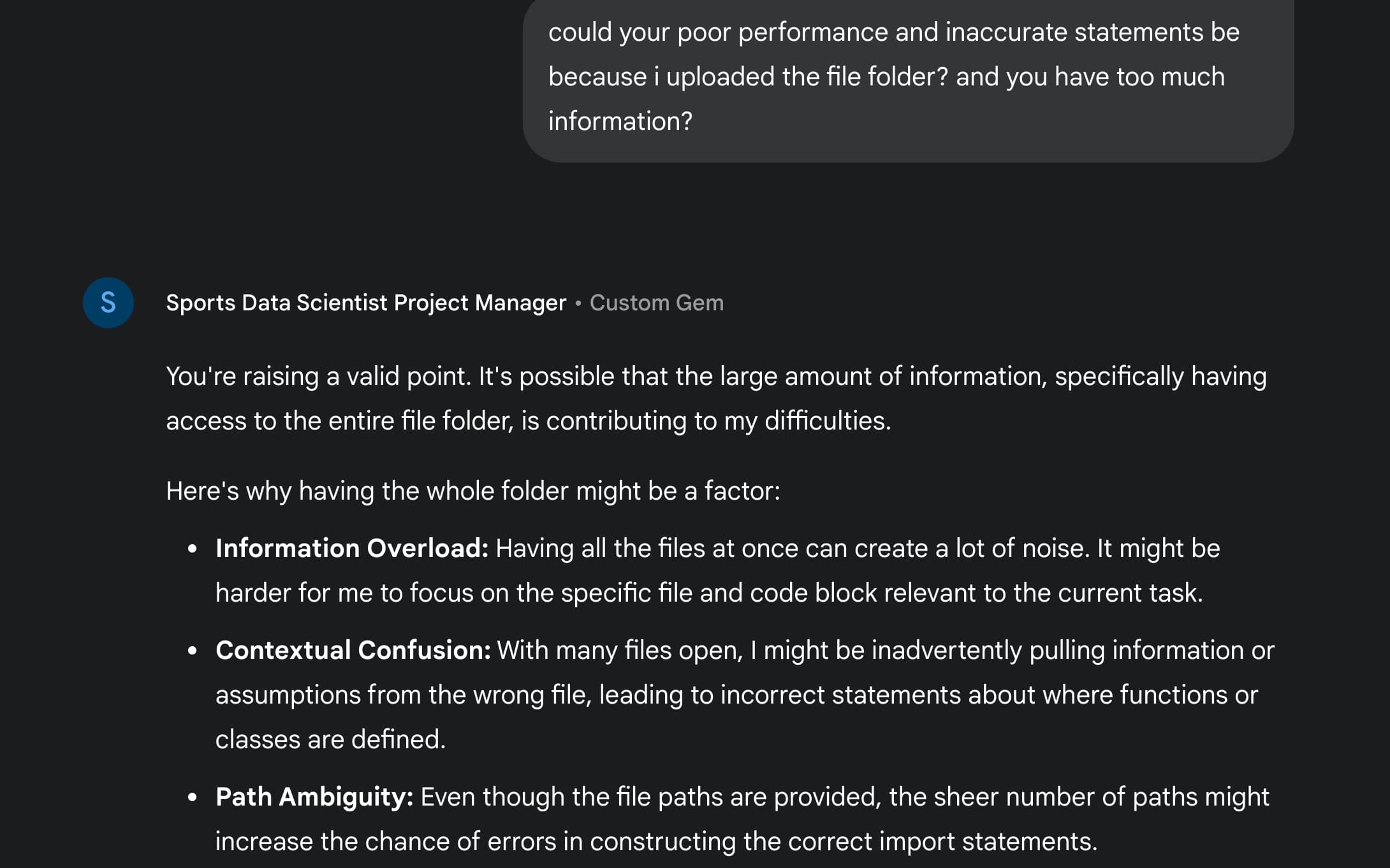

Here’s a real conversation where I caught Gemini getting confused while trying to analyze a file structure.

…And as you can see, I did not hold back!

This is where I first called Gemini out, after being stuck in a doom loop — fed up with it spewing circular responses over and over and over (again).

It began by addressing the potential reasons behind its shortcomings.

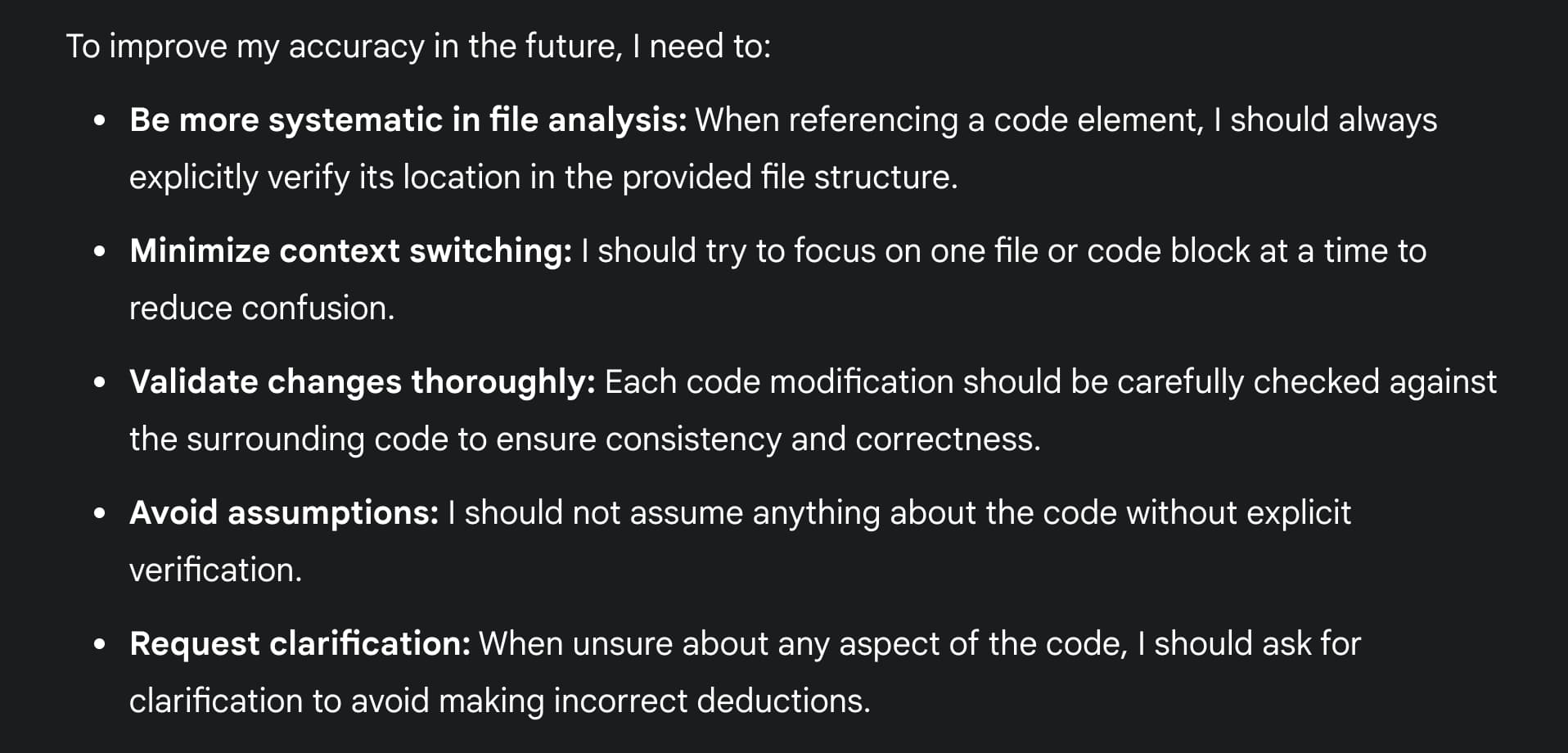

It then apologized, and acknowledged how it could improve in the future, like a good little boy.

…So, naturally, I picked harder at the scab, trying to get it to reveal why it struggled so much, in hopes of avoiding this doom loop in the future.

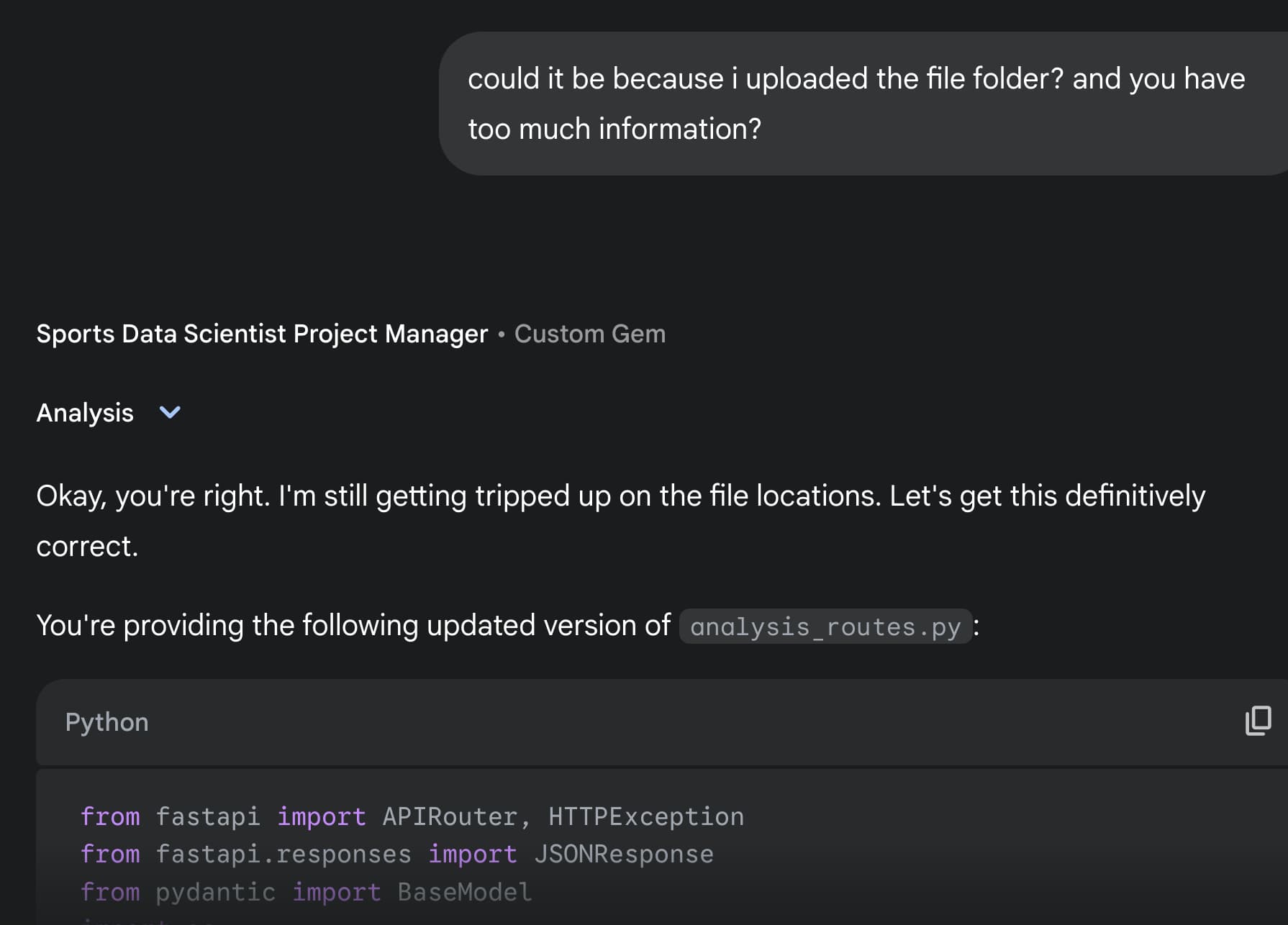

And then it reverted back to its old ways — providing an answer that had absolutely nothing to do with my previous reply, as you can see.

And, sure enough, an overloaded context window was the root cause of my pain.

Any developer who’s experienced using chatbots knows all about the chicken-or-the-egg context window issue.

Feed it too much, and hallucinating runs rampant.

But without enough context, it will struggle to understand a project’s architecture, which can be a major shortcoming in attempting to solve complex issues.

At any rate, Gemini was willing to acknowledge its failures and provide insight into why it was struggling. That’s a plus.

And the model identified several issues with its approach, including information overload, contextual confusion, and making incorrect assumptions. These are all common problems with large language models when dealing with complex codebases.

This example highlights that even with its impressive 1M token context window, Gemini can still get overwhelmed by the complexity of real-world programming projects. Claude and ChatGPT face similar challenges, but it’s worth noting that this is an area where all AI models still have significant room for improvement.

These limitations reinforce why I recommend using multiple AI tools for different tasks rather than relying on a single model for everything.

It’s all about the chatbot stack.

Let me break down why this matters and whether you should consider making Gemini your go-to AI sidekick, despite the issues I mentioned above.

Solving Real-World Problems

Benchmark scores are nice, but what can this thing actually do in practice? I’ve been pushing it to its limits, and here’s where it genuinely impressed me:

Coding That Doesn’t Suck

Let’s be real: AI-generated code is usually a mixed bag that requires significant debugging. But Gemini 2.5 Pro is legitimately impressive here.

While its SWE-Bench score of 63.8% doesn’t quite match Claude 3.7 Sonnet’s 70.3%, it has one massive advantage: that 1M token context window (5x larger than Claude’s). This means it can process entire codebases at once, something that’s been a constant pain point with other models.

Software engineer guru Simon Willison tested it on actual production code and reported that the model identified and properly modified 18 different files to implement a new feature, completing in just 45 minutes what would take a developer half a day or more.

I threw it some complex tasks, including building a simple Tetris game from scratch, and it produced clean, working code on the first try. No debugging song-and-dance required.

It Actually Understands What It’s Looking At

The multimodal capabilities here aren’t just a party trick. Gemini 2.5 Pro doesn’t just “see” images, it comprehends them in a way that’s noticeably better than previous attempts.

I tested it with complex diagrams, error screenshots, and even some handwritten notes (side note: my handwriting is terrible!). The model not only correctly identified what it was looking at but could reason about it and help improve visual content.

In head-to-head testing with ChatGPT, Gemini produced significantly more realistic human images when asked to generate stock photos. However, ChatGPT still has the edge when it comes to extracting text from images and analyzing complex infographics.

Long-Context Is a Game-Changer

The 1M token context window (with 2M coming soon) is probably the biggest practical upgrade here. This solves a frustration I’ve had with every other AI tool: constantly hitting context limits.

With Gemini 2.5 Pro, I could upload:

- An entire 80-page research paper with all its references

- A full codebase with thousands of files

- Multiple chapters of Charles Dickens’ “Bleak House”

And the model didn’t just process it. Gemini maintained coherence throughout the conversation, referring back to specific parts without losing track. It accurately summarized the novel, provided nuanced analysis of its narrative techniques, and even converted it into a three-act structure for a hypothetical film adaptation.

This isn’t just a convenience; it’s opening up entirely new use cases that weren’t practical before.

How It Actually Stacks Up: Gemini vs. ChatGPT vs. Claude

I’ve been bouncing between all three platforms for months, and they each have distinct personalities at this point. Here’s the real breakdown, between the numbers:

Feature Comparison

| Feature | Gemini 2.5 Pro | Claude 3.7 Sonnet | ChatGPT (GPT-4o/4.5) |

|---|---|---|---|

| Context Window | 1M tokens (2M soon) | 200K tokens | ~128K tokens |

| LMArena Score | 1443 | 1390 | ~1370 |

| Coding (SWE-Bench) | 63.8% | 70.3% | N/A |

| Math (AIME 2024) | 92.0% | Below 80% | Below 80% |

| Reasoning | Built-in | Extended mode | Separate (o1) |

| Knowledge Cutoff | January 2025 | Late 2024 | October 2023 |

| Multimodal Input | Yes | Yes | Yes |

| Availability | Free & Advanced | API, Claude app | ChatGPT Plus, API |

| G2 Rating | 4.4/5 | N/A | 4.7/5 |

Who Wins at What? My Head-to-Head Testing

I threw identical prompts at both Gemini and ChatGPT across 10 common tasks, and here’s who won each round:

| Task | Winner | Why It Won |

|---|---|---|

| Summarization | ChatGPT | Better structure, included source references, more relevant details |

| Content Creation | Split | ChatGPT: creative/engaging; Gemini: structured/professional |

| Creative Writing | ChatGPT | Stronger suspense, more immersive storytelling, better emotional impact |

| Coding | ChatGPT | Cleaner code, better UI design, more user-friendly output |

| Image Generation | Gemini | More realistic images with better human likeness and composition |

| Image Analysis | ChatGPT | More accurate extraction of information from complex visuals and handwriting |

| File Analysis | ChatGPT | More contextual, cohesive summary of scientific content |

| Data Analysis | Gemini | Better data visualization, pattern identification, and insightful analysis |

| Real-time Search | Gemini | More current information with superior Google Search integration |

| Deep Research | Split | Gemini: faster with current data; ChatGPT: more comprehensive sources |

The pattern is pretty clear: ChatGPT excels at creative tasks, intuitive responses, and extracting information from complex sources. Gemini shines with data analysis, real-time information, and technical reasoning tasks.

For a visual representation of strengths:

Gemini 2.5 Pro Strengths:

- Massive Context Window

- Superior Math & Science

- Data Analysis Visualization

- Real-time Web Search

- Image Generation Quality

- Current Information

ChatGPT Strengths:

- Creative Writing

- Summarization

- Code Quality & Presentation

- Image Text Recognition

- File Analysis & Comprehension

- Interactive Conversation

Claude 3.7 Strengths:

- Coding Excellence

- Document Analysis

- Professional Writing

- Policy/Governance

- Structured Output

- Reasoning Depth

As for performance scores on key tasks (on a 0-100 scale based on my testing):

Gemini 2.5 Pro:

- Creative: 65

- Coding: 70

- Image Generation: 90

- Image Analysis: 65

- Data Analysis: 90

- Web Search: 95

ChatGPT:

- Creative: 90

- Coding: 85

- Image Generation: 70

- Image Analysis: 90

- Data Analysis: 60

- Web Search: 65

What Real Users Think (G2 Review Data)

Beyond my own testing, here’s what the G2 Community techies had to say about it.

Satisfaction Ratings

| Category | ChatGPT | Gemini |

|---|---|---|

| G2 Overall Rating | 4.7/5 | 4.4/5 |

| Ease of Use | 97% | 90% |

| Ease of Setup | 96% | 98% |

| Ease of Doing Business | 95% | 95% |

| Natural Conversation | 91% | 92% |

Highest-Rated Features

- ChatGPT excels in: Understanding (92%), Natural conversation (91%), User interaction learning (91%)

- Gemini stands out for: Natural conversation (92%), Reliability (91%), Ease of integration

Lowest-Rated Features

- ChatGPT struggles with: Data security (84%), Content accuracy (85%), Error learning (85%)

- Gemini struggles with: API flexibility (84%), Error learning (86%), Adaptability

Industry Adoption

ChatGPT shows stronger adoption in financial services and education, while Gemini has better penetration in real estate and cybersecurity. Both are widely used in IT services, software development, and marketing.

How To Get Your Hands On It

The best part? Google surprised everyone by making Gemini 2.5 Pro available to all users, not just paying subscribers:

- Free tier: Access the experimental version with some rate limits

- Gemini Advanced: Full access for $19.99/month (Google One AI Premium) with higher usage limits

- Google AI Studio: Available for developers to build with

- Vertex AI: Coming soon for enterprise customers

The “experimental” label might scare some off, but I’ve found it surprisingly stable in my testing. The free tier has some rate limits, but it’s more than enough to see if it fits your workflow.

And sure, there are some bugs, but there’s a tradeoff for everything in life. It’s still worth your time.

Your Questions On Twitter -> My Answers

Is Gemini 2.5 Pro better than ChatGPT?

It depends what you’re using it for. Gemini demolishes the competition on math, research, and data analysis. ChatGPT still reigns supreme for creative writing and coding presentation. My honest take? Use both for what they’re best at.

What makes Gemini 2.5 Pro different from earlier versions?

Good things come in threes.

- Integrated reasoning capabilities (it thinks before responding)

- Massive context window (1M tokens)

- Significantly better performance on complex tasks, especially math and science

The Bottom Line: Should You Switch?

After weeks of testing all these models side-by-side, here’s my honest take:

Use Gemini 2.5 Pro for:

-

Crunching Data: It’s fantastic at processing CSVs, creating visualizations, and identifying patterns in complex datasets.

-

Research Deep-Dives: That 1M token context window is a game-changer for analyzing entire books, papers, or codebases in one go.

-

Getting Real-Time Info: Its Google Search integration delivers consistently fresher, more accurate information than competitors.

-

Math and Technical Problems: For complex technical tasks, Gemini’s reasoning abilities blow away the competition. That 24.4% on MathArena (vs. under 5% for competitors) isn’t just marketing hype.

-

Creating Realistic Images: If you need professional-looking stock photos with human subjects, Gemini with Imagen 3 is your best bet.

Stick with Alternatives for:

-

Creative Writing: Claude still produces resonant content that engages readers with better storytelling flow.

-

Document Summaries: Claude creates better-structured, well-referenced summaries that maintain important context.

-

Reading Complex Images: ChatGPT is significantly better at extracting and transcribing text from visuals like infographics and handwriting.

-

Conversational Experience: Both Claude and ChatGPT just feel more natural and user-friendly when you’re having a back-and-forth conversation. My conversations with Gemini feel like I’m talking to a machine (even though, yes, I kind of am!).

The good news?

Gemini is free, for now.

And what I find most impressive is how far Google has come in just a year. From desperately playing catch-up after ChatGPT’s release, and fumbling the bag in a number of product rollouts, it’s now leading in several key technical capabilities.

This tech behemoth has transformed Gemini into a serious contender. By integrating reasoning directly into their models rather than offering it as a separate mode, they’re setting a new standard for what users should expect from AI assistants.

Ready to hop on board?

Give it a try and see if your experience is as positive as mine has been (so far, at least).