I remember the first time I swung a hammer.

No nails, no wood—just a shiny tool and a lot of enthusiasm.

The result? Absolutely nothing. A hammer without nails is just a paperweight.

Large-language models (LLMs) work the same way. A single, carefully worded prompt—the hammer—isn’t enough. They need nails: background facts, relevant files, previous messages, and a clean memory space to anchor your request.

Supplying those “nails” is context engineering, and it’s the real reason AI can 10× your workflow.

A Good Prompt Isn’t Enough Anymore

Traditional prompt engineering focuses on what you say to the model. It’s useful, but it maxes out fast because the model still lacks everything it needs to reason deeply.

If your chat feels like it’s hallucinating half the time, chances are you’re feeding it isolated prompts with no supporting context.

Enter Context Engineering

Context engineering is the art of what you provide before the call even fires. It bundles three core ingredients:

- Retrieval-Augmented Generation (RAG) – fetching just-in-time documents from a vector database.

- Structured memory and state – letting the model remember only what matters.

- Tool outputs – calculators, APIs, or spreadsheets that drop fresh data straight into the context window.

Give an LLM these ingredients, and you’ve given it nails. The result is longer, more accurate reasoning with fewer hallucinations.

And let’s be honest: No one likes hallucinations.

Vibe Coding: Making Context Feel Natural

A tweet I recently posted sums up where we’re currently at, from a chatbot trend timeline perspective.

Prompt Engineering → Vibe Coding → Context Engineering

“Vibe coding” is my shorthand for blending tech with human tone—making the AI feel like it’s in the room. I’ll open a chat and preload it with:

- A bullet list of project goals

- Key brand guidelines (“We speak in plain English, no jargon”)

- Recent decisions (“Use Tailwind CSS, not Bootstrap”)

Those few lines create a vibe the model can follow for hours. It’s lightweight context, but it makes every answer sound on-brand and on-point.

Give the Model Nails: RAG + Vector Databases

Let’s get practical. Suppose you’re writing product docs. Your script might:

- Accept a topic like “User onboarding.”

- Query a vector database of past docs to pull the five most similar pages. Microsoft Learn walks you through it, step by step.

- Feed those snippets—plus your style guide—into the context window.

Now the model has 90 percent of what it needs before you ever type “Draft a quickstart guide.” Accuracy shoots up, manual editing drops, and you haven’t touched the wording of your prompt at all.

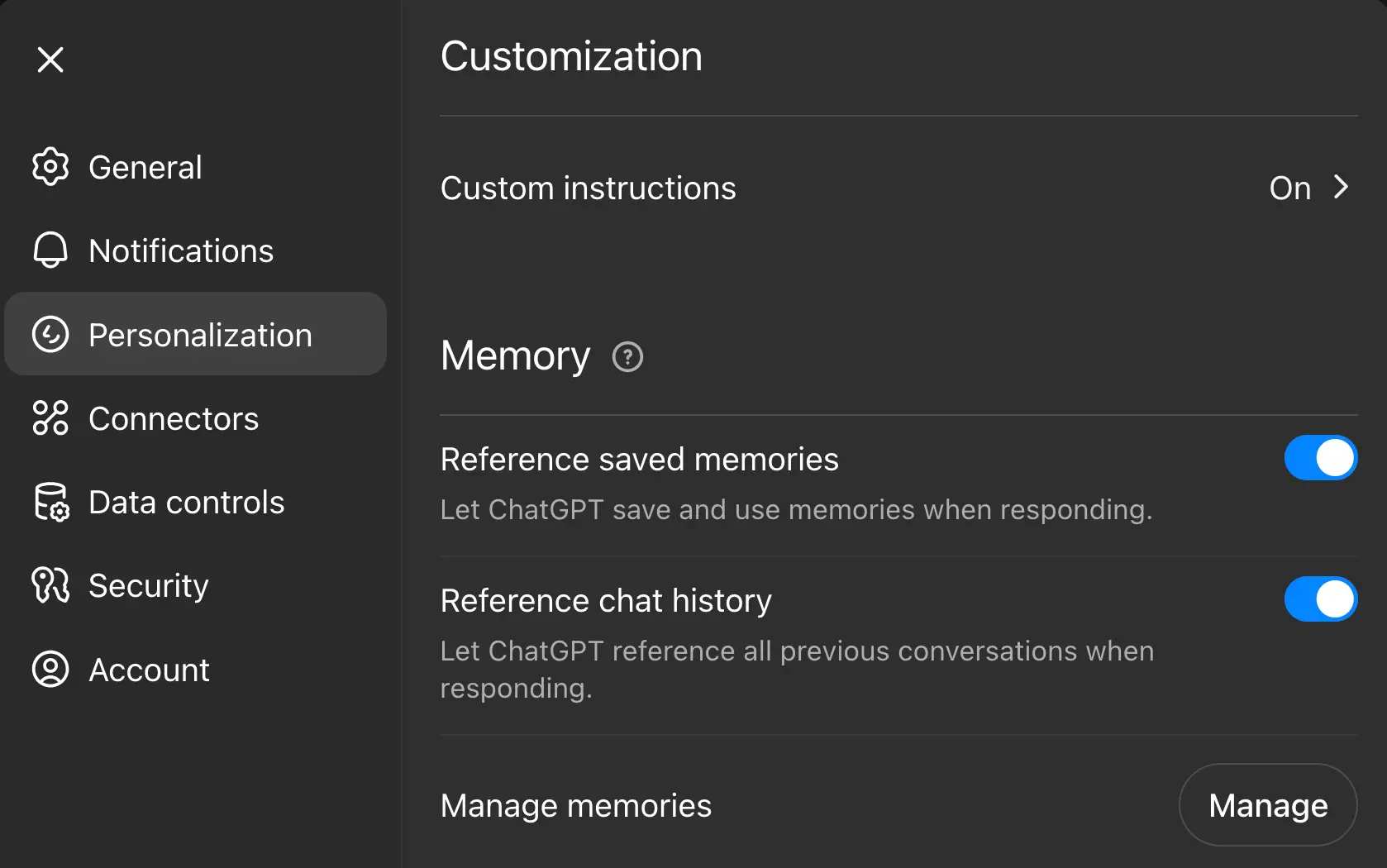

Keep the Workbench Clean: Chat Memory Hygiene

Context isn’t just what you add; it’s what you remove. If your ChatGPT memory is clogged with last month’s trivia, the model will weave that junk into today’s answers.

Quick AI pro tip from my LinkedIn feed:

- Go to Settings → Personalization → Manage Memory.

- Archive or mark throwaway chats as Temporary.

- Keep only the threads that align with your current projects.

Here’s a visual example of where to click to manage memories:

OpenAI confirms that deleting or archiving memories trims clutter and lowers hallucination risk. Plus, ChatGPT’s new memory upgrade—even for free users—makes hygiene more important than ever.

You’d be surprised by what a more clean and concise “memory” can do for your LLM! So make sure to pick and choose what you’re storing, and what is either “temporary” or “archived.”

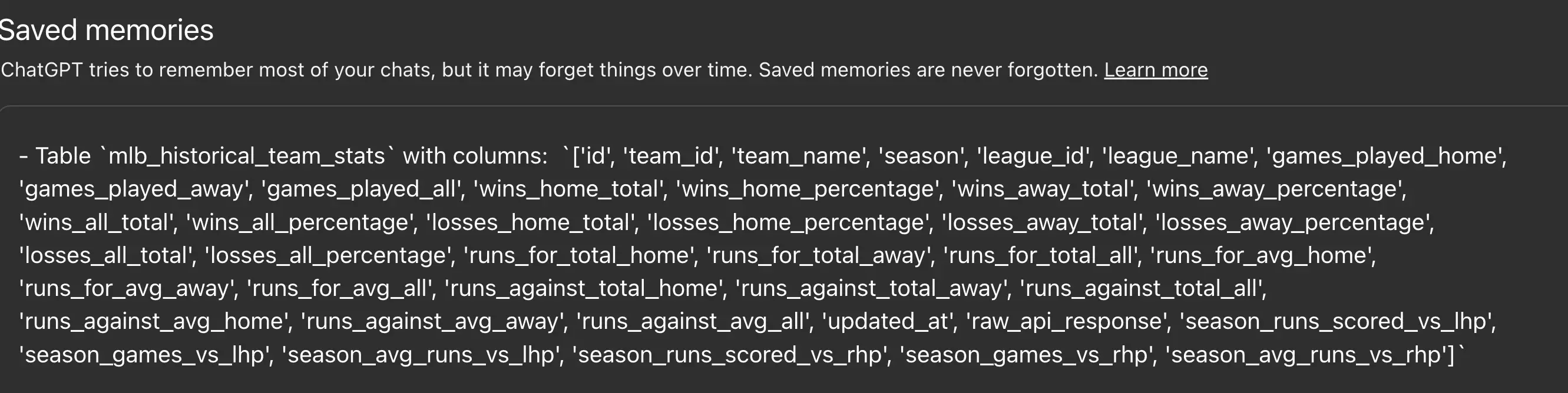

Here’s a recent example from a conversation I had with OpenAI’s o3 model.

A Real-World 10× Workflow

Here’s my daily loop:

- Morning reset – I archive anything unrelated to this week’s sprint.

- Data pull – A Zapier task dumps fresh analytics into Pinecone.

- Write prompt – “Generate a weekly metrics summary for the team.”

- RAG kicks in – The script grabs yesterday’s CSV, last week’s summary, and my brand voice sheet.

- LLM response – Out pops a clean, on-brand report with charts—no extra tweaking.

Time saved: about 45 minutes per report. Multiply that across a month, and context engineering gives me an extra workday to spend on higher-impact tasks.

Slow Down to Speed Up

It feels counter-intuitive, but pausing to gather context—like picking up nails before swinging the hammer—makes everything faster:

- Fewer hallucinations because the model sees the bigger picture.

- Consistent voice because vibe coding sets boundaries.

- Scalable workflows because RAG automates the heavy lifting.

Slow down, load your context, then watch your AI sprint.

TLDR

If you’re still polishing individual prompts, you’re playing the wrong game. Step back, engineer the context, and let the model do real work. The hype is real—just like that hammer, it only matters when you bring the nails.

Never sit down to prompt an LLM without your nails.